Unjustified True Disbelief

Yesterday I wrote about fake experts and credentialism, but left open the question of how to react. The philosopher Edmund Gettier is famous for presenting a series of problems (known as Gettier cases) designed to undermine the justified true belief account of knowledge. I'm interested in a more pragmatic issue: people rejecting bad science when they don't have the abilities necessary to make such a judgment, or in other words, unjustified true disbelief.1

There are tons of articles suggesting that Americans have recently come to mistrust science: the Boston Review wants to explain "How Americans Came to Distrust Science", Scientific American discusses the "crumbling of trust in science and academia", National Geographic asks "Why Do Many Reasonable People Doubt Science?", aeon tells us "there is a crisis of trust in science", while the Christian Science Monitor tells us about the "roots of distrust" of the "anti-science wave".

I got into a friendly argument on twitter about the first article in that list, during which Pascal-Emmanuel Gobry wrote that "normal people do know that 'peer-reviewed studies' are largely and increasingly BS". I see two issues with this: 1) I don't think it's accurate, and 2) even if it were accurate, I don't think normal people can separate the wheat from the chaff.

Actual Trust in Science

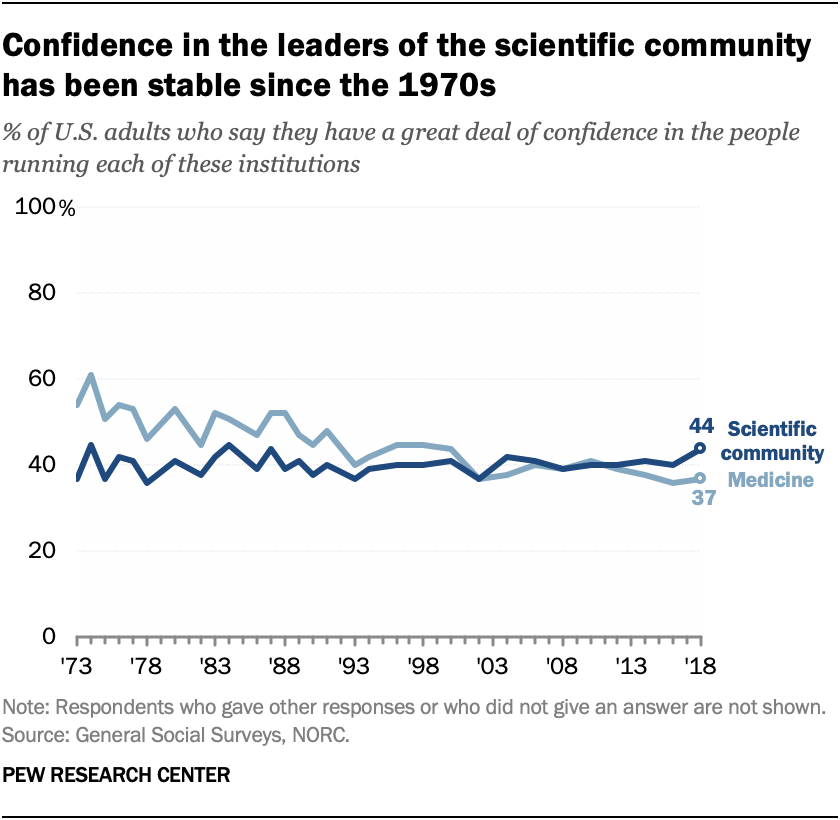

Surveys find that trust in scientists has remained fairly stable for half a century.2

A look at recent data shows an increase in "great deal or fair amount of confidence" in scientists from 76% in 2016 to 86% in 2019. People have not come to distrust science, to which one might reasonably ask: why not? We've been going on about the replication crisis for a decade now; how could this possibly not affect people's trust in science? The answer is that normal people don't care about the replication crisis, and wouldn't have the tools needed to understand it even if they did.

Choosing Disbelief

Let's forget that and say, hypothetically, that normal people have come to understand that "peer-reviewed studies are largely and increasingly BS". What alternatives does the normal person have? The way I see it, one can choose among three options:

- Distrust everything and become a forest hobo.

- Trust everything anyway.

- Pick and choose by judging things on your own.

Let's ignore the first one and focus on the choice between the second and the third. It boils down to this: the judgment is inevitably going to be imperfect, so does the gain from doubting false science outweigh the loss from doubting true science? That depends on how good people are at doubting the right things.
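To make that trade-off concrete, here is a minimal back-of-the-envelope sketch. It assumes a toy model that does not come from any of the sources above: a fixed share of findings are true, a layperson doubts false findings at some "hit rate" and true findings at some "false alarm rate", and each correct or mistaken doubt carries a fixed payoff. The function name, parameters, and numbers are all hypothetical.

```python
def net_value_of_doubt(p_true, hit_rate, false_alarm_rate, gain, loss):
    """Expected payoff per finding of "pick and choose" relative to "trust everything".

    Toy model, all parameters hypothetical:
      p_true           -- share of findings that are actually true
      hit_rate         -- probability of doubting a finding that is false
      false_alarm_rate -- probability of doubting a finding that is true
      gain             -- benefit of correctly doubting a false finding
      loss             -- cost of wrongly doubting a true finding
    """
    benefit = (1 - p_true) * hit_rate * gain   # false findings correctly rejected
    cost = p_true * false_alarm_rate * loss    # true findings wrongly rejected
    return benefit - cost


if __name__ == "__main__":
    # Made-up numbers: most findings are true, the layperson's judgment is
    # better than chance but noisy, and wrongly rejecting a true finding
    # costs five times more than correctly rejecting a false one helps.
    value = net_value_of_doubt(p_true=0.8, hit_rate=0.6, false_alarm_rate=0.3,
                               gain=1.0, loss=5.0)
    print(f"{value:.2f}")  # -1.08: selective doubt does worse than trusting everything
```

Under these assumptions the result goes negative whenever the cost term outweighs the benefit term, so picking and choosing only pays off with a much better ratio of hits to false alarms, or with much lower stakes attached to the true findings.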

There's some evidence that laypeople can distinguish which studies will replicate and which won't, but this ability is limited and in the end relies on intuition rather than an understanding and analysis of the work. Statistical evidence is hard to evaluate: even academic psychologists are pretty bad at the basics. The reasons why vaccines are probably safe and nutrition science is probably crap, the reasons why prospect theory is probably real and social priming is probably fake, are complicated! If it were easy to make the right judgment, actual scientists wouldn't be screwing up all the time. Thus any disbelief laypeople end up with will probably be unjustified. And the worst thing about unjustified true disbeliefs is that the same unreliable judgment that produces them also produces unjustified false disbeliefs.3

Another problem with unjustified disbelief is that it fails to alter incentives in a useful way. Feedback loops are only virtuous if the feedback is epistemically reliable: if public doubt lands on good and bad science alike, researchers gain nothing by producing better work. (A point relevant to experts as well.)

And what exactly are the benefits of knowing about the replication crisis? So you think implicit bias is fake; what are you going to do with that information? Bring it up at your company's next diversity seminar? For the vast majority of people, beyond the intrinsic value of believing true things, there is not much practical value in knowing about weaknesses in science.

Credentials Exist for a Reason

When they work properly, institutional credentials serve an extremely useful purpose: most laymen have no ability to evaluate the credibility of experts (and this is only getting worse due to increasing specialization). Instead, they offload this evaluation to a trusted institutional mechanism and then reap the rewards as experts uncover the secret mechanisms of nature and design better microwave ovens. There is an army of charlatans and mountebanks ready to pounce on anyone straying from the institutionally-approved orthodoxy—just look at penny stock promoters or "alternative" medicine.

Current strands of popular scientific skepticism offer a hint of what we can expect if there were more of it. Is this skepticism directed at methodological weaknesses in social science? Perhaps some valid questions about preregistration and outcome switching in medical trials? Elaborate calculations of the expected value of first doses first vs the risk of fading immunity? No, popular skepticism is aimed at very real and very useful things like vaccination,4 evolution, genetics, and nuclear energy. Most countries in the EU have banned the cultivation of genetically modified crops, for example: a moronic policy that damages not just Europeans but spills over onto the people who need GMOs the most, African farmers.5 At one point Zambia refused food aid in the middle of a famine because the president thought it was "poison". In the past, shared cultural beliefs were tightly protected; today a kind of cheap skepticism filters down to people who don't know what to do with it, and they just end up in a horrible mess.

Realistically, the alternative to blind trust in the establishment is not some enlightened utopia where we believe the true science and reject the fake experts; the alternative is a wide-open space for bullshit artists to waltz in and take advantage of people. The practical reality of scientific skepticism is memetic and political, and completely unjustified from an epistemic perspective. Gobry himself once wrote a twitter thread about homeopathy and conspiratorial thinking in France: "the country is positively enamored with pseudo-science." He's right, it is. And that's exactly why we can't trust normal people to make the judgment that "peer-reviewed studies are largely and increasingly BS".6

The way I see it, the science that really matters also tends to be the most solid.7 The damage caused by "BS studies" seems relatively limited in comparison.

This line of reasoning also applies to yesterday's post on fake experts: for the average person, the choice between trusting a pseudonymous blogger versus trusting an army of university professors with long publication records in prestigious journals is pretty clear. From the inside view I'm pretty sure I'm right, but from the outside view the base rate of correctness among heterodox pseudonymous bloggers isn't very good. I wouldn't trust the damned blogger either! The only way to have even a modicum of confidence is personal verification, and unless you're part of the tiny minority with the requisite abilities, you should have no such confidence. So what are we left with? Helplessness and confusion. "Epistemic hell" doesn't even begin to cover it.

It is true that if you know where to look on the internet, you can find groups of intelligent and insightful generalists who outdo many credentialed experts. For example, many of the people I follow on twitter were (and in some respects still are) literally months ahead of the authorities on Covid-19. @LandsharkRides was only slightly exaggerating when he wrote that "here, in this incredibly small corner of twitter, we have cultivated a community of such incredibly determined autists, that we somehow know more than the experts in literally every single sphere". But while some internet groups of high-GRE generalists tend to be right, if you don't have the ability yourself it's hard to tell them apart from the charlatans.

But what about justified true disbelief?

Kahneman and Tversky came up with the idea of the inside view vs the outside view. The "inside view" is how we perceive our own personal situation, relying on our personal experiences and with the courage of our convictions. The "outside view" instead focuses on common elements, treating our situation as a single observation in a large statistical class.

From the inside view, skeptics of all stripes believe they are epistemically justified and possess superior knowledge. The people who think vaccines will poison their children believe it, the people who think the earth is flat believe it, and the people who doubt social science p=0.05 papers believe it. But the base rate of correctness among them is low, and the errors they make are dangerous. How do you know if you're one of the actually competent skeptics with genuinely justified true disbelief? From the inside, you can't tell. And if you can't tell, you're better off just believing everything.
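The base-rate point can be written as a one-line application of Bayes' rule. In the minimal sketch below, which is an illustration rather than anything from Kahneman and Tversky, a "signal" such as feeling justified is treated as evidence of competence. If incompetent skeptics show the signal just as often as competent ones, it carries no information and the posterior stays at the base rate, which is what "from the inside, you can't tell" amounts to. The function name, base rate, and signal probabilities are all made-up assumptions.

```python
def posterior_competent(base_rate, p_signal_if_competent, p_signal_if_not):
    """P(competent | signal) by Bayes' rule.

    base_rate             -- hypothetical share of skeptics who are actually competent
    p_signal_if_competent -- chance a competent skeptic shows the signal
    p_signal_if_not       -- chance an incompetent skeptic shows the signal
    """
    joint_competent = p_signal_if_competent * base_rate
    joint_not = p_signal_if_not * (1 - base_rate)
    return joint_competent / (joint_competent + joint_not)


if __name__ == "__main__":
    base_rate = 0.05  # made-up base rate of competence among skeptics

    # Signal = "I feel epistemically justified": every skeptic has it,
    # so the posterior stays at the base rate (about 0.05).
    print(posterior_competent(base_rate, 1.0, 1.0))

    # Signal = "passes an externally graded track-record check"
    # (hypothetical pass rates): now the signal actually discriminates.
    print(round(posterior_competent(base_rate, 0.9, 0.1), 3))  # 0.321
```

Even a fairly discriminating outside signal leaves the posterior well below one half when the base rate is this low, which is why the inside feeling of being right counts for so little.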

Some forms of disbelief are less dangerous than others. For example, if epidemiologists tell you that you don't need to wear a mask but you choose to wear one anyway, there's very little downside if your skepticism is misguided. The reverse (not wearing a mask when they tell you to) has a vastly larger downside. But again, this relies on an ability to predict and weigh risks.

The one thing we can appeal to is data from the outside: objectively graded forecasts, betting, market trading. And while these tools could quiet your own doubts, realistically the vast majority of people are not going to bother with these sorts of things. (And are you sure you are capable of judging people's objective track records?) Michael A. Bishop argues against fixed notions of epistemic responsibility in "In Praise of Epistemic Irresponsibility: How Lazy and Ignorant Can You Be?", instead favoring an environment where "to a very rough first approximation, being epistemically responsible would involve nothing other than employing reliable belief-forming procedures." I'm certainly in favor of that.
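As one illustration of an "objectively graded forecast", here is a minimal sketch of the Brier score, a standard scoring rule for probabilistic predictions of yes/no events: the mean squared difference between the stated probability and what happened (1 or 0). Lower is better, and always answering 50% scores 0.25. The choice of scoring rule is an assumption, not something Bishop proposes, and the forecasts and outcomes below are made up.

```python
def brier_score(forecasts, outcomes):
    """Mean squared error between probability forecasts and 0/1 outcomes.

    Lower is better: 0.0 is perfect, and always saying 0.5 scores 0.25.
    """
    if len(forecasts) != len(outcomes) or not forecasts:
        raise ValueError("need the same, non-zero number of forecasts and outcomes")
    return sum((p - o) ** 2 for p, o in zip(forecasts, outcomes)) / len(forecasts)


if __name__ == "__main__":
    # Hypothetical record: 1 = the finding replicated, 0 = it did not.
    outcomes = [1, 1, 0, 1, 0]
    confident_skeptic = [0.9, 0.2, 0.1, 0.3, 0.8]  # made-up, often confidently wrong
    hedger = [0.5] * len(outcomes)                 # always says 50%

    print(round(brier_score(confident_skeptic, outcomes), 3))  # 0.358
    print(brier_score(hedger, outcomes))                        # 0.25
```

In this made-up record the confident skeptic scores worse than someone who always says 50%. A score like this only means something if the questions were resolved by someone other than the forecaster, which is the "from the outside" part of the argument.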

So if you're reading this and feel confident in your stats abilities, your generalist knowledge, your intelligence, the quality of your intuitions, and can back those up via an objective track record, then go ahead and disbelieve all you want. But spreading that disbelief to others seems irresponsible to me. Perhaps even telling lay audiences about the replication crisis is an error. Maybe Stuart Ritchie should buy up every copy of his book, Science Fictions, and burn them, for the common good.

That's it?

Yup. Plenty of experts are fake but people should trust them anyway. Thousands of "studies" are just nonsense but people should trust them anyway. On net, less disbelief would improve people's lives. And unless someone has an objective track record showing they know better (and you have the ability to verify and compare it), you should probably ignore the skeptics. Noble lies are usually challenging ethical dilemmas, but this one strikes me as a pretty easy case.

I can't believe I ended up as an establishment shill after all the shit I've seen, but there you have it.8 I'm open to suggestions if you have a superior solution that would allow me to maintain an edgy contrarian persona.

- 1. I am indebted to David A. Oliver for the phrase. As far as I can tell, he was the first person to ever use "unjustified true disbelief", on Christmas Eve 2020.

- 2. One might question what these polls are actually measuring. Perhaps they're really measuring whether respondents think of themselves (or would like to present themselves) as the type of person who believes in science, regardless of whether they actually do. Perhaps the question of how much regular people "trust scientists" is not meaningful?

- 3. You might be thinking that it's actually not that difficult, but beware the typical mind fallacy. You're reading a blog filled with long rants on obscure metascientific topics, and that's a pretty strong selection filter. You are not representative of the average person. What seem like clear and obvious judgments to you are more or less magic to others.

- 4. It should be noted that when it comes to anti-vax, institutionally credentialed people are not exactly blameless. The Lancet published Wakefield's fraudulent MMR-autism paper and didn't retract it for 12 years.

- 5. The fear of GMOs is particularly absurd in light of the alternatives. The "traditional" techniques are based on inducing random mutations through radiation and hoping some of them are good. Yet while mutagenesis is considered perfectly safe and appropriate, targeted changes in plant genomes are supposedly terribly risky.

- 6. "But, we can educate..." I doubt it.

- 7. Epidemiologists (not to mention bioethicists) during Covid-19 provide one of the most significant examples of a field being simultaneously important and weak. But that one item can't outweigh all the stuff on the other side of the scale.

- 8. Insert Palpatine "ironic" gif here.