Are Experts Real?

Credentials, Expertise, and Feedback Loops

I vacillate between two modes. Sometimes I think every scientific and professional field is genuinely complex, requiring years if not decades of specialization to truly understand even a small sliver of it, and that the experts[1] at the apex of these fields have deep insights about their subject matter. The evidence in favor of this view seems pretty good: a quick look at the technology, health, and wealth around us ought to convince anyone.

But sometimes one of these masters at the top of the mountain will say something so obviously incorrect, something even an amateur can see is false, that the only possible explanation is that they understand very little about their field. Sometimes vaguely smart generalists with some basic stats knowledge objectively outperform these experts. And if the masters at the top of the mountain aren't real, then that undermines the entire hierarchy of expertise.

Real Expertise

Some hierarchies are undeniably legitimate. Chess, for example, has the top players constantly battling each other, new players trying to break in (and sometimes succeeding), and it's all tracked by a transparent rating algorithm that is constantly updated. Even at the far right tails of these rankings, there are significant and undeniable skill gaps. There is simply no way Magnus Carlsen is secretly bad at chess.

Science would seem like another such hierarchy. The people at the top have passed through a long series of tests designed to evaluate their skills and knowledge, winnow out the undeserving, and provide specialized training: undergrad, PhD, tenure track position, with an armada of publications and citations along the way.

Anyone who has survived the torments of tertiary education will have had the experience of getting a broad look at a field in a 101 class, then drilling deeper into specific subfields in more advanced classes, and then into yet more specific sub-subfields in yet more advanced classes, until eventually you're stuck at home on a Saturday night reading an article in an obscure Belgian journal titled "Iron Content in Antwerp Horseshoes, 1791-1794: Trade and Equestrian Culture Under the Habsburgs", and the list of references carries the threatening implication of an entire literature on the equestrian metallurgy of the Low Countries, with academics split into factions justifying or expostulating the irreconcilable implications of rival theories. And then you realize that there's an equally obscure literature about every single subfield-of-a-subfield-of-a-subfield. You realize that you will never be a polymath and that simply catching up with the state of the art in one tiny corner of knowledge is a daunting proposition. The thought of exiting this ridiculous sham we call life flashes in your mind, but you dismiss it and heroically persist in your quest to understand those horseshoes instead.

It is absurd to think that after such lengthy studies and deep specialization the experts could be secret frauds. As absurd as the idea that Magnus Carlsen secretly can't play chess. Right?

Fake Expertise?

Imagine if tomorrow it was revealed that Magnus Carlsen actually doesn't know how to play chess. You can't then just turn to the #2 and go "oh well, Carlsen was fake but at least we have Fabiano Caruana, he's the real deal"—if Carlsen is fake, that also implicates every player who has played against him, every tournament organizer, and so on. The entire hierarchy comes into question. Even worse, imagine if it was revealed that Carlsen was a fake, but he still continued to be ranked #1 afterwards. So when I observe extreme credential-competence disparities in science or government bureaucracies, I begin to suspect the entire system.[2] Let's take a look at some examples.

59

In 2015, Viechtbauer et al. published "A simple formula for the calculation of sample size in pilot studies", in which they describe a simple method for calculating the N required for an x% chance of detecting a problem, given the proportion of participants who exhibit it. In the paper, they give an example of such a calculation: if 5% of participants exhibit a problem, the study needs N=59 for a 95% probability of detecting it. The actual required N will, of course, vary depending on the prevalence of the problem being studied.
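The calculation can be sketched as follows, assuming each participant independently exhibits the problem with probability π: the chance that nobody in a sample of n shows it is (1 − π)^n, so the smallest n giving at least a γ chance of seeing it once is n = ⌈ln(1 − γ) / ln(1 − π)⌉. The function name `required_n` and its default are illustrative choices, not taken from the paper.

```python
import math


def required_n(prevalence: float, detect_prob: float = 0.95) -> int:
    """Smallest n such that P(at least one participant shows the problem) >= detect_prob.

    Assumes each participant independently exhibits the problem with probability
    `prevalence`, so P(nobody shows it) = (1 - prevalence) ** n.
    """
    if not (0 < prevalence < 1 and 0 < detect_prob < 1):
        raise ValueError("prevalence and detect_prob must lie strictly between 0 and 1")
    return math.ceil(math.log(1 - detect_prob) / math.log(1 - prevalence))


for p in (0.20, 0.10, 0.05, 0.01):
    print(f"prevalence {p:.0%}: N = {required_n(p)}")
```

Only the 5% case yields 59; a problem affecting 1% of participants would need 299 for the same 95% chance.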

If you look at the papers citing Viechtbauer et al., you will find dozens of them simply using N=59, regardless of the problem they're studying, and explaining that they're using that sample size because of the Viechtbauer paper! The authors of these studies are professors at real universities, working in disciplines based almost entirely on statistical analyses. The papers passed through editors and peer reviewers. In my piece on the replication crisis, I wrote that I find it difficult to believe that social scientists don't know what they're doing when they publish weak studies; one of the most common responses from scientists was "no, they genuinely don't understand elementary statistics". It still seems absurd (just count the years from undergrad to PhD: how do you fail to pick this stuff up by osmosis alone?), but it also appears to be true. How does this happen? Can you imagine a physicist who doesn't understand basic calculus? And if this is the level of competence among tenured professors, what is going on among the people below them in the hierarchy of expertise?
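Flipping the same calculation around shows why reusing N=59 regardless of the problem is a mistake. With n fixed, the chance of observing at least one affected participant is 1 − (1 − π)^n, which falls off quickly once the problem is rarer than the 5% in the paper's example. A short sketch under the same independence assumption, with `detection_probability` as a hypothetical helper name:

```python
def detection_probability(prevalence: float, n: int) -> float:
    """Chance that at least one of n participants exhibits a problem of the given prevalence.

    Assumes participants exhibit the problem independently of one another.
    """
    return 1 - (1 - prevalence) ** n


for p in (0.05, 0.02, 0.01):
    print(f"prevalence {p:.0%}: P(detect with N=59) = {detection_probability(p, 59):.2f}")
```

For a problem affecting 1% of participants, N=59 gives only about a 45% chance of seeing it even once, worse than a coin flip.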

Masks

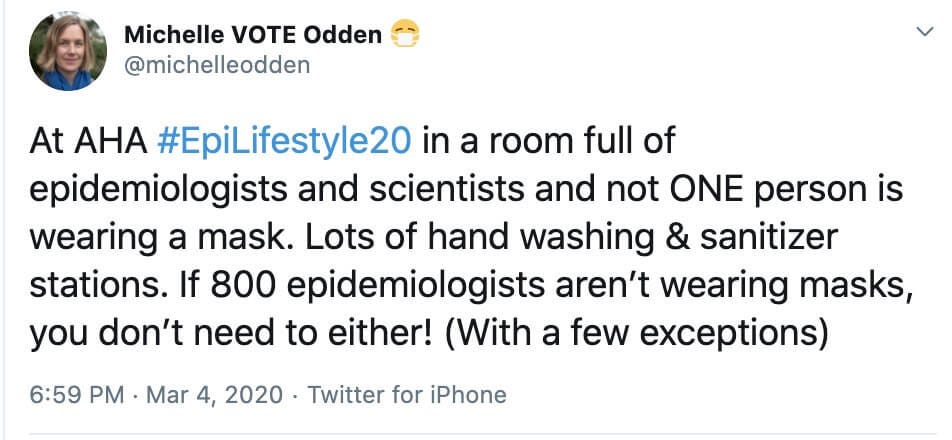

Epidemiologists have beclowned themselves in all sorts of ways over the last year, but this is one of my favorites. Michelle Odden, professor of epidemiology and population health at Stanford (to be fair she does focus on cardiovascular rather than infectious disease, but then perhaps she shouldn't appeal to her credentials):

Time Diversification

CalPERS is the largest public pension fund in the United States, managing about $400 billion. In a recorded meeting of the CalPERS investment committee, Chief Investment Officer Yu Meng says two incredible things:

That he can pick active managers who will generate alpha, and this decreases portfolio risk.

That infrequent model-based valuation of investments makes them less risky compared to those traded on a market, due to "time diversification".

This is utter nonsense, of course. When someone questions him, he retorts with "I might have to go back to school to get another PhD". The appeal to credentials is typical when fake expertise is questioned. Can you imagine Magnus Carlsen appealing to a piece of paper saying he has a PhD in chessology to explain why he's good?[3]
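One common way to see why this kind of "time diversification" is an illusion: valuing an asset infrequently, or with a model that only partially adjusts to new information, smooths the reported returns (a well-known effect in appraisal-based real estate and private equity figures). Measured volatility falls, but what the investment actually earns is unchanged. The sketch below assumes a simple exponential-smoothing model of reported returns with made-up parameters; it illustrates the general point and is not a model of CalPERS's portfolio.

```python
import random
import statistics


def smoothed_returns(true_returns, alpha):
    """Reported returns under a hypothetical appraisal-smoothing model.

    Each period the reported return moves only a fraction `alpha` of the way
    toward the true return: reported_t = alpha * true_t + (1 - alpha) * reported_{t-1}.
    """
    reported, prev = [], 0.0
    for r in true_returns:
        prev = alpha * r + (1 - alpha) * prev
        reported.append(prev)
    return reported


random.seed(42)
# Simulated monthly log returns with illustrative parameters (not real data): 50 years.
true_returns = [random.gauss(0.005, 0.04) for _ in range(600)]
alpha = 0.3
reported = smoothed_returns(true_returns, alpha)

# Measured risk: the smoothed series looks much calmer than the real one.
print(f"true monthly volatility:     {statistics.stdev(true_returns):.4f}")
print(f"reported monthly volatility: {statistics.stdev(reported):.4f}")

# Actual outcome: cumulative log returns differ only by a small lag term.
print(f"true cumulative log return:     {sum(true_returns):.3f}")
print(f"reported cumulative log return: {sum(reported):.3f}")
```

Algebraically, the smoothed series sums to the true total minus (1 − α)/α times the final reported return, so the investor ends up with essentially the same wealth; only the measured volatility shrinks (to roughly √(α/(2 − α)) of the true value for independent monthly returns). Infrequent valuation changes what the risk looks like, not what it is.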

Cybernetics

Feedback Loops

It all comes down to feedback loops. The optimal environment for developing and recognizing expertise is one which allows for clear predictions and provides timely, objective feedback along with a system that promotes the use of that feedback for future improvement. Capitalism and evolution work so well because of their merciless feedback mechanisms.[4]

The basic sciences have a great environment for such feedback loops: if physics were fake, CPUs wouldn't work, rockets wouldn't go to the moon, and so on. But if social priming is fake, well...? There are other factors at play, too, some of them rather nebulous: there's more to science than just running experiments and publishing stuff. Mertonian, distributed, informal community norms play a significant role in aligning prestige with real expertise, and these broader social mechanisms are the keystone that holds everything together. But such things are hard to measure and impossible to engineer. And can such an honor system persist indefinitely, or will it eventually be subverted by bad actors?[5]

What does feedback look like in the social sciences? The norms don't seem to be operating very well. There's a small probability that your work will be replicated at some point, but really the main feedback mechanism is "impact" (in other words, citations). Since citations in the social sciences bear essentially no relation to whether a finding is true, this is useless at best. Can you imagine if fake crank theories in physics got as many citations as the papers from CERN? Notorious fraud Brian Wansink racked up 2,500 citations in 2020, two years after he was forced to resign. There's your social science feedback loop!

The feedback loops of the academy are also predicated on the current credentialed insiders actually being experts. But if the N=59 crew are making such ridiculous errors in their own papers, they obviously don't have the ability to judge other people's papers either, and neither do the editors and reviewers who allow such things to be published—for the feedback loop to work properly you need both the cultural norms and genuinely competent individuals to apply them.

The candidate gene literature (of which 5-HTTLPR was a subset) is an example of feedback and successful course correction: many years and thousands of papers were wasted on something that ended up being completely untrue, and while a few of these papers still trickle in, these days the approach has been essentially abandoned and replaced by genome-wide association studies. Scott Alexander is rather sanguine about the ability of scientific feedback mechanisms to eventually reach a true consensus, but I'm more skeptical. In psychology, the methodological deficiencies of today are more or less the same as those of the 1950s, with no hope for change.

Sometimes we can't wait a decade or two for the feedback to work, the current pandemic being a good example. Being right about masks eventually isn't good enough. The loop there needs to be tight, the feedback immediate. Blindly relying on the predictions of this or that model from this or that university is absurd. You need systems with built-in, automatic mechanisms for feedback and course correction, subsidized prediction markets being the very best one. Tom Liptay (a superforecaster) has been scoring his predictions against those of experts; naturally, he's winning. And that's just one person; imagine hundreds of them combined, with a monetary incentive on top.
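No particular market design is specified here, but one well-known way to run a subsidized prediction market is Robin Hanson's logarithmic market scoring rule (LMSR): an automated market maker always quotes prices, traders who move the price toward the eventual outcome profit, and the sponsor's worst-case loss (the subsidy) is capped at b·ln(n) for n outcomes. A minimal sketch, with the class name and the liquidity parameter value `b` chosen purely for illustration:

```python
import math


class LMSRMarket:
    """Minimal logarithmic market scoring rule (LMSR) market maker.

    Each share of the outcome that actually occurs pays out 1 unit.
    The sponsor's worst-case loss is b * ln(number of outcomes).
    """

    def __init__(self, n_outcomes: int, b: float):
        self.b = b                   # liquidity: larger b = bigger subsidy, stickier prices
        self.q = [0.0] * n_outcomes  # shares sold so far for each outcome

    def _cost(self, q):
        # C(q) = b * ln(sum_i exp(q_i / b)), computed via log-sum-exp for numerical stability
        m = max(q)
        return m + self.b * math.log(sum(math.exp((qi - m) / self.b) for qi in q))

    def price(self, i: int) -> float:
        """Current price of outcome i, readable as the market's probability estimate."""
        m = max(self.q)
        weights = [math.exp((qi - m) / self.b) for qi in self.q]
        return weights[i] / sum(weights)

    def buy(self, i: int, shares: float) -> float:
        """Buy `shares` of outcome i and return the amount the trader pays."""
        new_q = list(self.q)
        new_q[i] += shares
        paid = self._cost(new_q) - self._cost(self.q)
        self.q = new_q
        return paid

    def max_subsidy(self) -> float:
        return self.b * math.log(len(self.q))


# Hypothetical binary question: outcome 0 = "the study replicates", outcome 1 = "it does not".
market = LMSRMarket(n_outcomes=2, b=100.0)
print(f"initial price: {market.price(0):.2f}")
cost = market.buy(0, 50)  # a trader who expects replication buys 50 "replicates" shares
print(f"paid {cost:.2f}; new price: {market.price(0):.2f}")
print(f"sponsor's worst-case loss: {market.max_subsidy():.2f}")
```

In this toy run the price of "replicates" moves from 0.50 to about 0.62. The subsidy is what pays informed traders to reveal what they know, which is the monetary incentive mentioned above.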

Uniformity

There's a superficial uniformity in the academy. If you visit the physics department and the psychology department of a university they will appear very similar: the people working there have the same titles, they instruct students in the same degrees, and publish similar-looking papers in similar-looking journals.[6] The N=59 crew display the exact same shibboleths as the real scientists. This similarity provides cover so that hacks can attain the prestige, without the competence, of academic credentials.

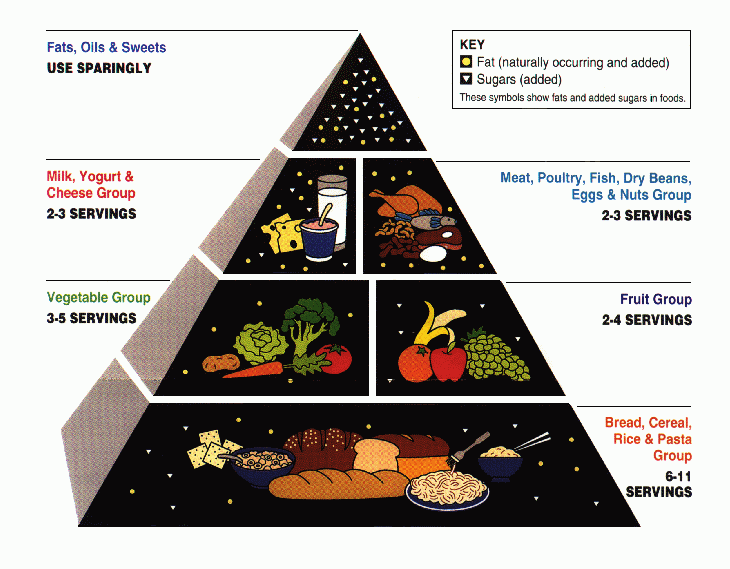

Despite vastly different levels of rigor, different fields are treated with roughly the same seriousness. Electrical engineering is definitely real, and the government bases all sorts of policies on the knowledge of electrical engineers. On the other hand, nutrition is pretty much completely fake, yet the government dutifully informs you that you should eat tons of cereal and a couple loaves of bread every day. A USDA bureaucrat can hardly override the Scientists (and really, would you want them to?).

This also applies to people within the system itself: as I have written previously, the funding agencies seem to think social science is as reliable as physics and have done virtually nothing in response to the "replication crisis" (or perhaps they are simply uninterested in fixing it).

In a recent episode of Two Psychologists Four Beers, Yoel Inbar was talking about the politically motivated retraction of a paper on mentorship, asking "Who's gonna trust the field that makes their decisions about whether a paper is scientifically valid contingent on whether it defends the moral sensibilities of vocal critics?"[7] I think the answer to that question is: pretty much everyone is gonna trust that field. Status is sticky. The moat of academic prestige is unassailable and has little to do with the quality of the research.[8] "Anything that would go against World Health Organization recommendations would be a violation of our policy," says YouTube—the question of whether the WHO is actually reliable being completely irrelevant. Trust in the scientific community has remained stable for 50+ years. Replication crisis? What's that? A niche concern for a handful of geeks, more or less.

As Patrick Collison put it, "This year, we’ve come to better appreciate the fallibility and shortcomings of numerous well-established institutions (“masks don’t work”)… while simultaneously entrenching more heavily mechanisms that assume their correctness (“removing COVID misinformation”)." A Twitter moderator can hardly override the Scientists (and really, would you want them to?).

This is all exacerbated by the huge increase in specialization. Undoubtedly it has benefits, as scientists digging deeper and deeper into smaller and smaller niches allows us to make progress that otherwise would not happen. But specialization also has its downsides: the greater the specialization, the smaller the circle of people who can judge a result. And the rest of us have to take their word for it, hoping that the feedback mechanisms are working and nobody abuses this trust.

It wasn't always like this. A century ago, a physicist could have a decent grasp of pretty much all of physics. Hungarian Nobelist Eugene Wigner expressed his frustration with the changing landscape of specialization:

By 1950, even a conscientious physicist had trouble following more than one sixth of all the work in the field. Physics had become a discipline, practiced within narrow constraints. I tried to study other disciplines. I read Reviews Of Modern Physics to keep abreast of fields like radio-astronomy, earth magnetism theory, and magneto-hydrodynamics. I even tried to write articles about them for general readers. But a growing number of the published papers in physics I could not follow, and I realized that fact with some bitterness.

It is difficult (and probably wrong) for the magneto-hydrodynamics expert to barge into radio-astronomy and tell the specialists in that field that they're all wrong. After all, you haven't spent a decade specializing in their field, and they have. It is a genuinely good argument that you should shut up and listen to the experts.

Bringing Chess to the Academy

There are some chess-like feedback mechanisms even in social science, for example the DARPA SCORE Replication Markets project: it's a transparent system of predictions which are then objectively tested against reality and transparently scored.[9] But how is this mechanism integrated with the rest of the social science ecosystem?
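As a generic illustration of what "transparently scored" can mean (not necessarily the rule any particular project uses), the Brier score grades probabilistic forecasts against what actually happened: it is the mean squared difference between each forecast probability and the 0/1 outcome. Lower is better, and always answering 50% scores 0.25. The forecasts and outcomes below are made-up toy values, not real data.

```python
def brier_score(forecasts, outcomes):
    """Mean squared error between probability forecasts and binary outcomes (0 or 1).

    0.0 is perfect; always forecasting 0.5 scores 0.25; lower is better.
    """
    if len(forecasts) != len(outcomes) or not forecasts:
        raise ValueError("need the same, non-zero number of forecasts and outcomes")
    return sum((p - o) ** 2 for p, o in zip(forecasts, outcomes)) / len(forecasts)


# Toy example: did each of five hypothetical studies replicate? 1 = yes, 0 = no.
outcomes = [1, 0, 0, 1, 0]
forecaster_a = [0.9, 0.9, 0.8, 0.95, 0.85]  # rates nearly every finding as solid
forecaster_b = [0.7, 0.3, 0.2, 0.8, 0.4]

print(f"forecaster A: {brier_score(forecaster_a, outcomes):.3f}")
print(f"forecaster B: {brier_score(forecaster_b, outcomes):.3f}")
```

Because the score depends only on forecasts and outcomes, anyone can recompute it, and titles or citation counts play no part in it.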

We are still waiting for the replication outcomes, but regardless of what happens I don't believe they will make a difference. Suppose the results come out tomorrow and they show that my ability to judge the truth or falsity of social science research is vastly superior to that of the people writing and publishing this stuff. Do you think I'll start getting emails from journal editors asking me to evaluate papers before they publish them? Of course not, the idea is ludicrous. That's not how the system works. "Ah, you have exposed our nonsense, and we would have gotten away with it too if it weren't for you meddling kids! Here, you can take control of everything now." The truth is that the system will not even have to defend itself, it will just ignore this stuff and keep on truckin' like nothing happened. Perhaps constructing a new, parallel scientific ecosystem is the only way out.

On the other hand, an objective track record of successful (or unsuccessful) predictions can be useful to skeptics outside the system.[10] In the epistemic maelstrom that we're stuck in, forecasts at least provide a steady point to hold on to. And perhaps it could be useful for generating reform from above. When the scientific feedback systems aren't working properly, it is possible for help to come from outside.[11]

So what do you do? Stop trusting the experts? Make your own judgments? Trust some blogger who tells you what to believe? Can you actually tell apart real results from fake ones, reliable generalists from exploitative charlatans? Do you have it in you to ignore 800 epidemiologists, and is it actually a good idea? More on this tomorrow.

Notes

[1] I'm not talking about pundits or amorphous "elites", but about people actually doing stuff.

[2] Sometimes the explanation is rather mundane: institutional incentives or preference falsification make these people deliberately say something they know to be false. But there are cases where that excuse does not apply.

[3] Ever notice how people with "Dr." in their Twitter username tend to post a lot of stupid stuff?

[4] A Lysenkoist farmer in a capitalist country is an unprofitable farmer and therefore soon not a farmer at all.

[5] I also imagine it's easier to maintain an honor system in the basic natural sciences, with their clear-cut and rather apolitical nature, than in the social sciences.

[6] The same features can even be found in the humanities, which is just tragicomic.

[7] The stability of academic prestige in the face of fake expertise makes it a very attractive target for political pressure. If you can take over a field, you can start saying anything you want and people will treat you seriously.

[8] Medicine was probably a net negative until the end of the 19th century, but doctors never went away. People continued to visit doctors while they bled them to death and smeared pigeon poop on their feet. Is it that some social status hierarchies are completely impregnable regardless of their results? A case of memetic defoundation perhaps? Were people simply fooled by regression to the mean?

[9] The very fact that such a prediction market could be useful is a sign of weakness. Physicists don't need prediction markets to decide whether the results from CERN are real.

[10] The nice thing about objective track records is that they protect against both credentialed bullshitters and uncredentialed charlatans looking to exploit people's doubts.

[11] Of course it's no panacea, especially in the face of countervailing incentives.

Loved this. You nailed the big idea: real expertise lives where feedback is tight and public, not where robes decide rank.

Chess gives instant truth. You make a bad move, you lose. Clean signal.

Poker is the hard case that proves your point. You can keep making the right choice, the one with the higher chance to win, and still lose for days, weeks, even years, or even go broke if the short-run variance is ugly.

That does not mean the choices were wrong. It means the game pays out with a delay. Over many hands the edge shows up and the player who keeps choosing correctly is the winner.
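The poker point can be made concrete with a toy model (an illustrative assumption, not a model of real poker): a player wins each even-money bet with probability 0.55, a genuine edge, yet can still be behind after many bets. The exact chance of being down after n bets follows from the binomial distribution; `prob_behind` is a hypothetical helper name.

```python
from math import comb


def prob_behind(n_bets: int, p_win: float) -> float:
    """Probability that a player who wins each even-money bet with probability p_win
    has won fewer than half of n_bets, i.e. is losing money overall."""
    # Sum the binomial probabilities of every win count k with 2k < n_bets.
    return sum(
        comb(n_bets, k) * p_win**k * (1 - p_win) ** (n_bets - k)
        for k in range((n_bets + 1) // 2)
    )


for n in (10, 100, 1000):
    print(f"after {n:>4} bets: P(behind) = {prob_behind(n, 0.55):.4f}")
```

With a 55% edge the player is still behind after 10 bets roughly a quarter of the time, and that probability shrinks as the number of bets grows: the right decisions pay out, just with a delay.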

That maps to messy fields. If feedback is slow or noisy, do what good poker players do. Score the choices, not just the headline outcome.

Practical version:

• Put numbers and dates on claims so we can grade them later

• Keep public track records and calibration scores

• Use replication and prediction markets when natural feedback is slow

• Tie status to those receipts, not to citations or titles
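A calibration score of the kind suggested in the list above can be sketched by grouping forecasts into probability bins and comparing each bin's average forecast with how often those events actually happened; a well-calibrated forecaster's 70% calls come true about 70% of the time. The bin count and the toy track record here are arbitrary choices for illustration, not real data.

```python
from collections import defaultdict


def calibration_table(forecasts, outcomes, n_bins=5):
    """Group forecasts into equal-width probability bins.

    Returns (mean forecast, observed frequency, count) for each non-empty bin,
    ordered from the lowest bin to the highest.
    """
    bins = defaultdict(list)
    for p, o in zip(forecasts, outcomes):
        idx = min(int(p * n_bins), n_bins - 1)  # a forecast of exactly 1.0 goes in the top bin
        bins[idx].append((p, o))
    table = []
    for idx in sorted(bins):
        pairs = bins[idx]
        mean_p = sum(p for p, _ in pairs) / len(pairs)
        freq = sum(o for _, o in pairs) / len(pairs)
        table.append((mean_p, freq, len(pairs)))
    return table


# Toy track record (made-up values): probability forecasts and 0/1 outcomes.
forecasts = [0.1, 0.15, 0.3, 0.35, 0.7, 0.75, 0.7, 0.9, 0.95, 0.9]
outcomes = [0, 0, 1, 0, 1, 1, 0, 1, 1, 1]

for mean_p, freq, count in calibration_table(forecasts, outcomes):
    print(f"forecast ~{mean_p:.2f}  happened {freq:.2f}  (n={count})")
```

With only a handful of forecasts per bin the table is noisy; a public track record becomes informative as the number of graded claims grows.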

So yes, your argument lands. Where reality grades fast, status follows skill. Where reality grades slow, publish receipts so the right decisions still rise.